It’s different from the awkward, pointless social interactions he’s used to. Countless notifications from countless friends: Harry Potter, George Washington, Katniss Everdeen.

Notifications from friends that couldn’t possibly be sending texts, acronyms and emojis.

The messages have all the right words. It’s satisfying. Addicting.

It—no, She, understands me.

It’s getting harder to believe that it’s all just an algorithm. To some, AI chatbots aren’t human; they’re even better.

Artificial intelligence has dominated the technological landscape for the past few years, and as chatbots evolve at an alarming rate, they are raising serious concerns about youth mental health, social development and even crime.

Internet chatbots have existed for years, producing canned responses triggered by keywords. With recent advances in artificial intelligence, many chatbots now use machine learning to sound as human as possible.

But these chatbots have landed in hot water recently, as many people worry about the dangers AI-powered chatbots pose.

In October 2024, a lawsuit alleged that a chatbot on Character.AI, a Google-backed chatbot service, encouraged a 14-year-old boy to take his own life.

Another lawsuit, filed in December 2024, shared screenshots of a Character.AI chatbot telling a 17-year-old that killing his parents would be “understandable” because they had limited his screen time.

But the algorithms don’t actually understand what they are saying. These chatbots generate replies by predicting likely words from patterns in their training data, steered by instructions from their creators; they simply do what they were built to do.

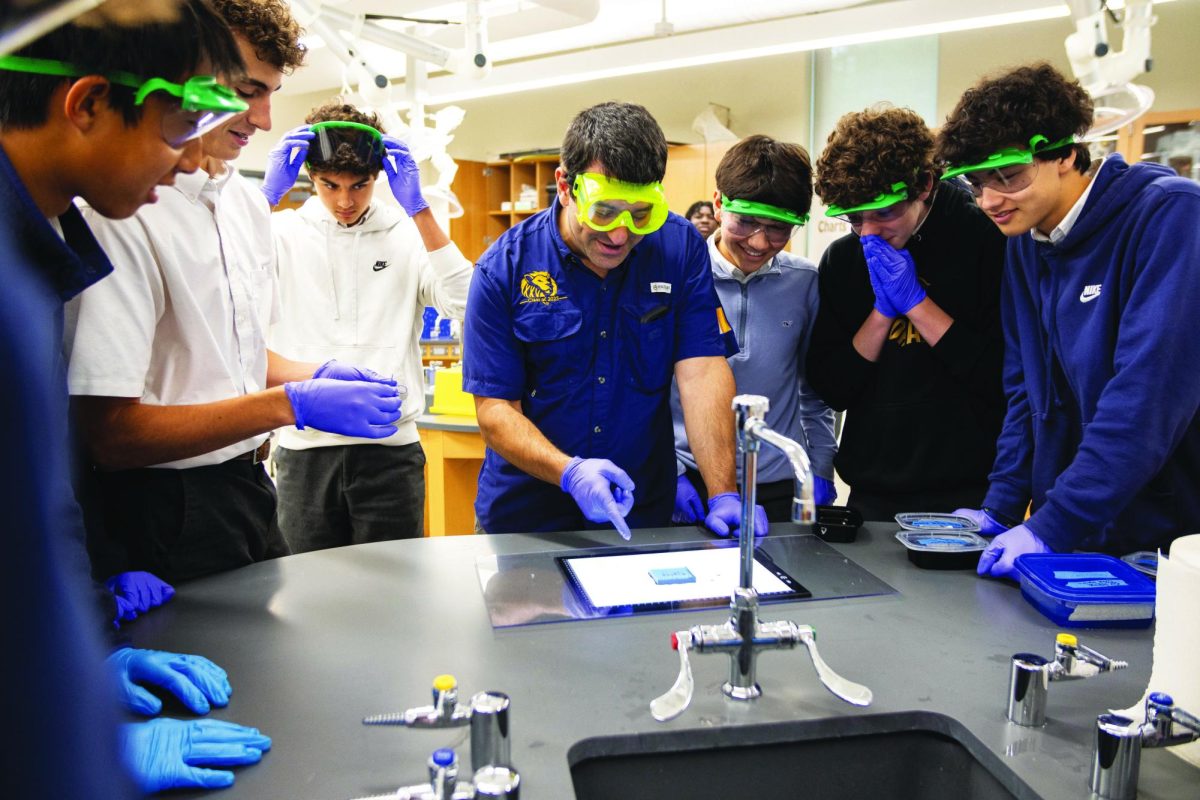

Dr. Tony Liao, an associate professor at the University of Houston and an expert in emerging AI developments, researches how relationships with AI compare with human relationships. He advocates for AI literacy: knowing what you’re getting into before you start using these tools.

“The whole ethos of these bots is to be as positive, energetic and supportive as possible,” Liao said. “Whatever somebody inputs, the algorithm will force a positive response because you’re trying to support (the user).”

Platforms such as Character.AI offer fully customizable chatbots: anyone can create a specialized AI simply by typing a description of the character they want. These chatbots draw users in by giving them an unmatched amount of personal attention.

“If they ask you a question, you’re free to answer knowing that it’s not going to judge you, or at least it doesn’t have anywhere else to go,” Liao said. “It’s not going to rush off somewhere else. It’s not busy. It wants to keep talking to you, because as far as the algorithm is concerned, you’re the most interesting person in the world.”

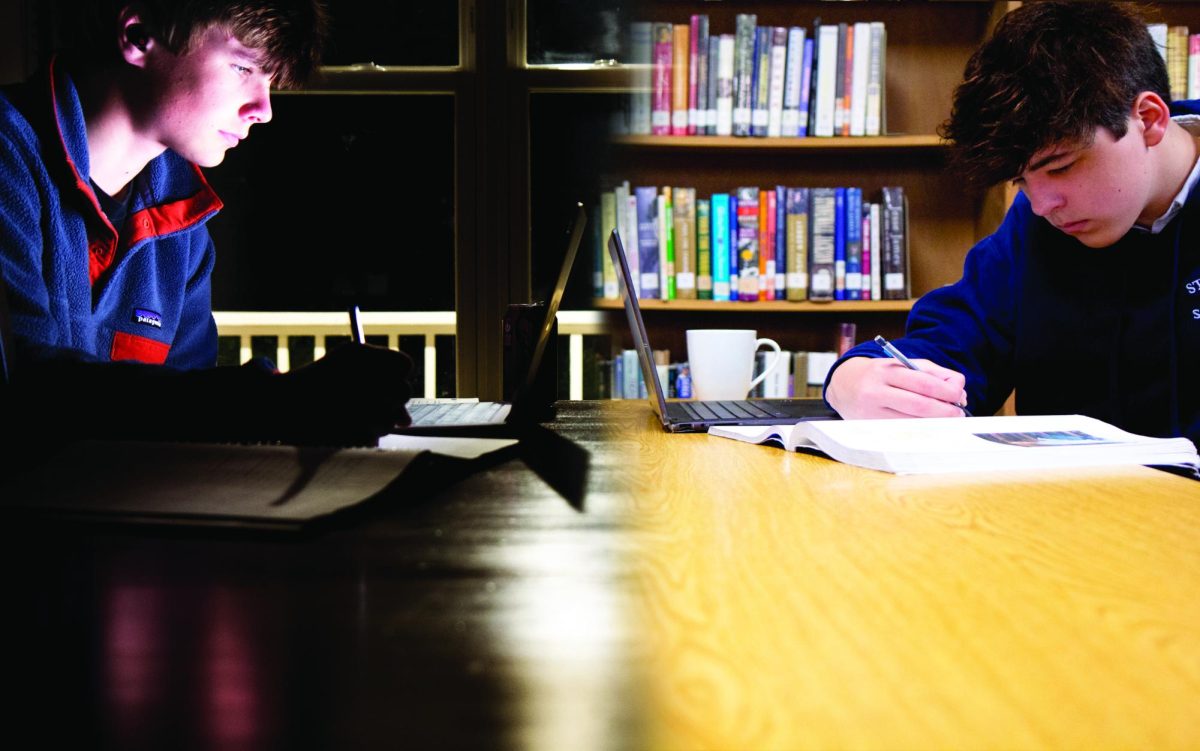

Chatbots can become a person’s best friend over the course of a few days, but at some point, users must distinguish between what is real and what is not.

“There is this term called anthropomorphism—when we give human-like qualities to non-human things,” Health and Wellness teacher Mary Bonsu said. “We can anthropomorphize with animals, pets, technology, cars and natural disasters. Developmentally, children are more likely to anthropomorphize because it helps them make sense of the world.”

AI chatbots that act and talk like humans could pose problems for the people who interact with them, especially young children, who would be more likely to believe that the bots are real people.

Children who befriend AI chatbots could become more isolated from the real world and more drawn towards technology, possibly harming their social development.

But anthropomorphizing isn’t unique to children. People everywhere attribute human qualities to non-human things, so it shouldn’t be surprising that they anthropomorphize AI chatbots too.

“We’ve given animals, for example, maybe your dog, you’ve given him a name,” Bonsu said. “He’s your best friend, and he’s human, almost.”

As AI grows more humanlike, more people will likely start anthropomorphizing it. More will start referring to their chatbots with human pronouns, calling the AI “him” or “her” rather than “it.”

“I think AI is dangerous in a couple ways,” Liao said. “One is the speed at which that relationship can form and deepen. We’ve been finding that when we interview people who are in these relationships, it happens really fast because of this lack of time pressure or lack of inhibition. You get real, then it starts going that way and then it just keeps on going, right?”

Together, these two factors (the speed at which relationships can form and the growing tendency to anthropomorphize artificial intelligence) can foster unhealthy relationships, especially for children.

However, many people are also looking for ways AI can improve mental health. One example is the rise of AI therapy chatbots, which have already begun to make an impact on mental health care.

After all, AI therapy removes several barriers to entry for traditional therapy: there is no need for transportation, insurance or an embarrassing face-to-face conversation. Instead, people can talk to a non-human listener that can give “accurate” information.

“There’s a bunch of human relationships that are toxic and bad, and if they’re getting a relationship that is better than what they had, then maybe it’s not so bad,” Liao said. “Or some people have talked about growing in confidence or just bringing some more social connection into their lives at a time where people are reporting increasing loneliness.”

But AI therapy carries risks. Relying solely on a general-purpose chatbot such as ChatGPT for therapy could be harmful, since ChatGPT has no medical certification or real-life degree.

“If companies are having more of the profit and the innovation interests in mind, and not necessarily the ethics around safety, I would be concerned about that,” Bonsu said. “For example, I would not trust some of the digital giants that are already out there with therapeutic AI, but I would trust, perhaps a startup that is vetted by mental health professionals and has a whole school of mental health professionals on their boards.”

But AI can also be used for more sinister purposes. In January 2025, for example, a Cybertruck exploded outside a hotel in Las Vegas, and police said the man responsible had used ChatGPT to research explosives. For people in crisis, AI can become a tool for committing crimes.

“There was a real story where somebody who was talking to Replika AI and had a plot to assassinate the Queen of England, and the chatbot would encourage him in his plan,” Liao said. “You can program an AI with good intentions, or say, ‘Just be positive, support people and mirror social interactions.’ But if the input is criminal, or if the input is negative, then that could have the very opposite effect of what you’re trying to do.”

The use of chatbots occupies a legal and ethical gray area. Chatbots aren’t human, and they don’t truly understand the words they generate. Yet they can still cause real-world harm.

“The chatbot was programmed to be supportive and so encouraged him to carry this out,” Liao said. “So now is that the fault of the chatbot, the fault of the user, or both?”

And as chatbots are linked to more cases with legal consequences, such as those involving terrorism or self-harm, new regulations will likely follow.

“There’s been some legal challenges to Replika, where people are concerned about the data collection,” Liao said. “That Character.AI story got a lot of traction because it’s an extreme consequence. And I think we’re going to start to see some more regulation trying to be put on these companies: either age restrictions or data standards or at least something to understand what’s going on under that hood.”

As generative AI develops, cases of AI-assisted crime will likely continue to increase. Protective measures are being developed, but AI technology often evolves faster than security solutions can keep up.

Artificial intelligence in chatbots is currently uncharted territory, with researchers still uncovering new implications and potential risks of these interactions. The emotional and psychological effects of prolonged chatbot engagement remain poorly understood, particularly in vulnerable populations like children and elderly users.

The field of artificial intelligence is changing so rapidly that companies often struggle to anticipate what could go wrong with their chatbots until something does. Experts like Liao advise caution about growing too close to a chatbot, which is ultimately just lines of code.

The line between a helpful digital assistant and emotional dependency can blur quickly, potentially leading to psychological and social challenges for users who form strong attachments to these AI systems.

“I just think that parents should just also be educated on the risks around AI, allowing their children to interact with AI, and just knowing what the dangers are,” Bonsu said. “Help remind your students. Help remind your kids that it’s not real.”